Welcome to “Part III” of our analysis of this year’s CAT4 results!

In Part I, we provided a lot of background context and shared the basic results of how we did this year. In Part II, we began sharing comparative data, focusing on snapshots of the same cohort (the same children) over time. Remember that it is complicated because of four factors:

- We only began taking the CAT*4 at this window of time in 2019 in Grades 3-8.

- We did NOT take the CAT*4 in 2020 due to COVID.

- We only took the CAT*4 in Grades 5-8 in 2021.

- We resumed taking the CAT*4 in Grades 3-8 in 2022.

In the future, that part (“Part II”) of the analysis will only grow more robust and meaningful. We also provided targeted analysis based on cohort data.

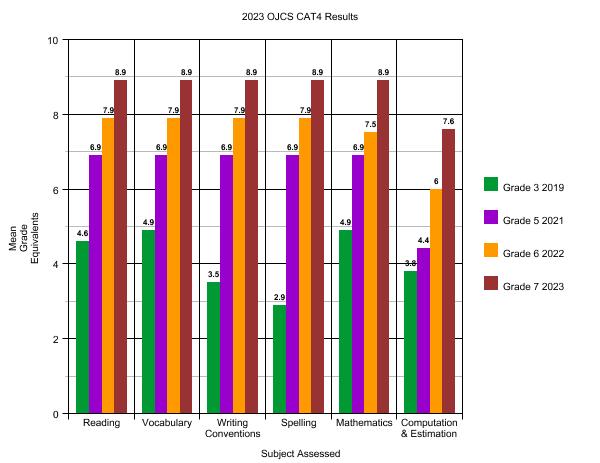

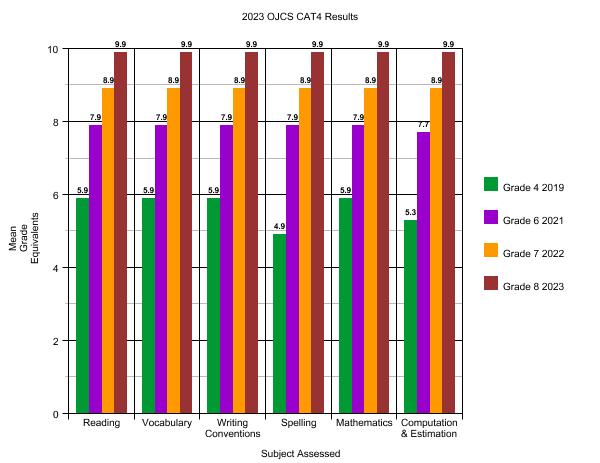

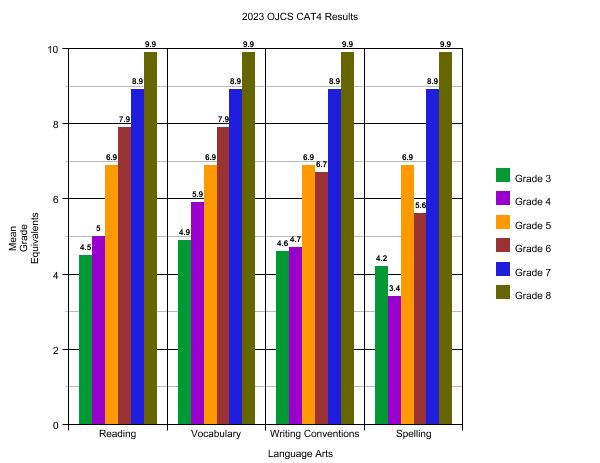

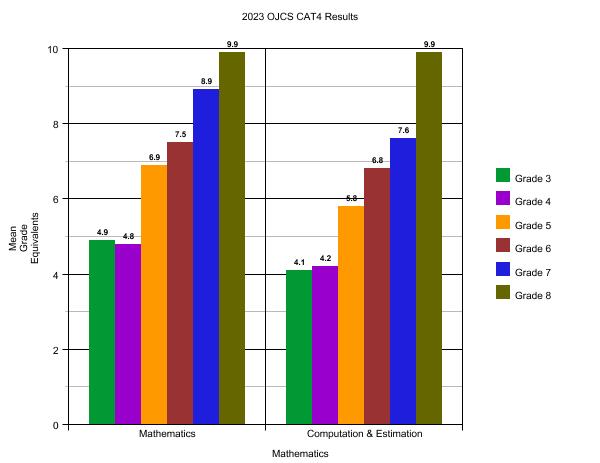

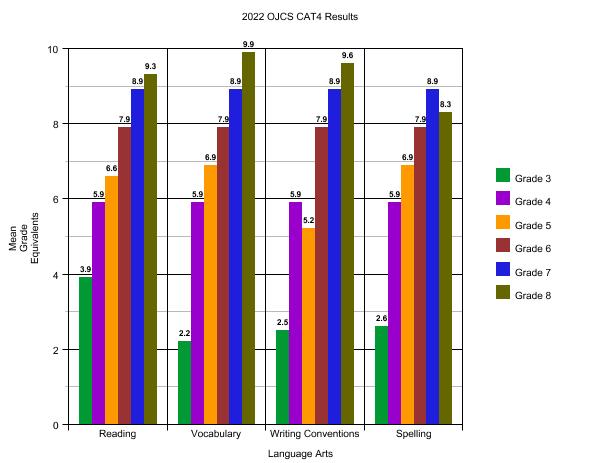

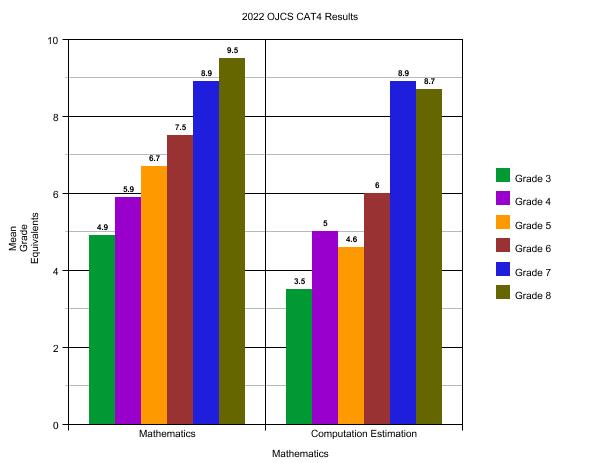

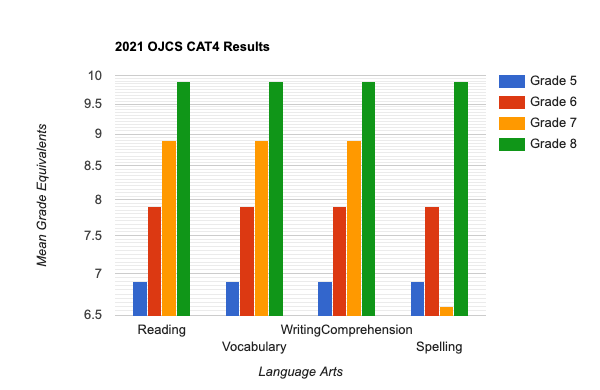

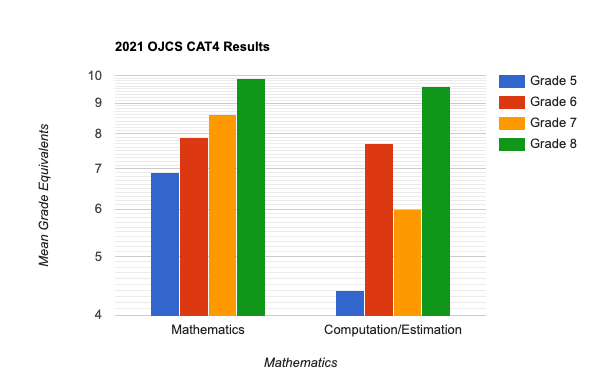

Here, in Part III, we will finish sharing comparative data, this time focusing on snapshots of the same grade (different groups of children). Because it is really hard to identify trends while factoring in skipped years and seismic issues, we will not go back to 2019 for comparative purposes as we did in Part II. Instead, we are only going to focus on the four grades that have multiyear comparative data post-COVID: Grades 5-8 from 2021, 2022, and 2023.

Here is a little analysis that will apply to all four snapshots:

- Remember that any score that is two grades above and ends in “.9” represents the max score, like getting a “6.9” for Grade 5.

- Bear in mind that the metric we are normally looking at when comparing a grade over time is either stability (if the baseline was appropriately high) or incremental growth (if the baseline was lower than desired and the school responded with a program or intervention).

- In 2023 we took it in the “.1” of the school year and in all prior years in the “.2”. For the purposes of this analysis, I am going to give or take “.1”.
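For readers who like to see the logic spelled out, here is a minimal sketch in Python of two of the rules above: the “.9” max score and the give or take of “.1”. It is only an illustration, not how CAT*4 itself computes anything. The function names are hypothetical, the max-score formula (grade plus 1.9) is an assumption inferred from the single “6.9 for Grade 5” example, and the scores in the usage note are made up.

```python
from decimal import Decimal


def max_score(grade: int) -> Decimal:
    """Assumed ceiling for a grade, e.g. 6.9 for Grade 5 (grade + 1.9)."""
    return Decimal(grade + 1) + Decimal("0.9")


def is_maxed(score: str, grade: int) -> bool:
    """True if a grade-equivalent score sits at the assumed ceiling."""
    return Decimal(score) >= max_score(grade)


def within_tolerance(score_a: str, score_b: str, tolerance: str = "0.1") -> bool:
    """True if two scores differ by no more than the give or take of .1.

    Scores are passed as strings so that Decimal compares tenths exactly,
    avoiding floating-point surprises such as 6.3 - 6.2 != 0.1.
    """
    return abs(Decimal(score_a) - Decimal(score_b)) <= Decimal(tolerance)
```

With these helpers, a made-up Grade 5 score of “6.9” counts as maxed out, and made-up scores of “6.1” one year and “6.2” the next would be treated as stable rather than as a change.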

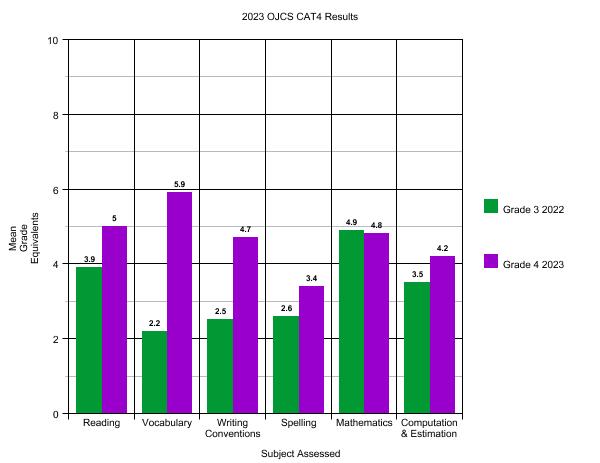

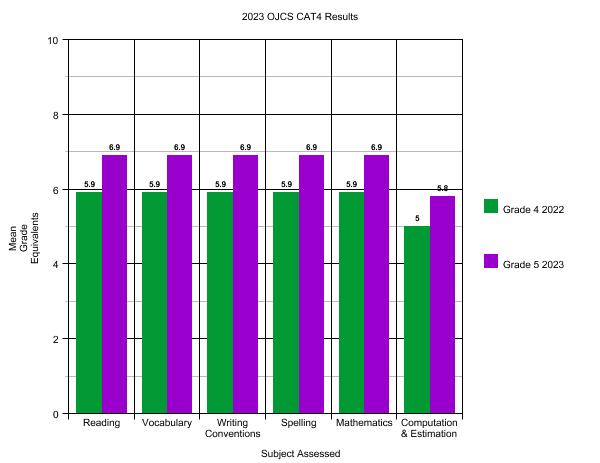

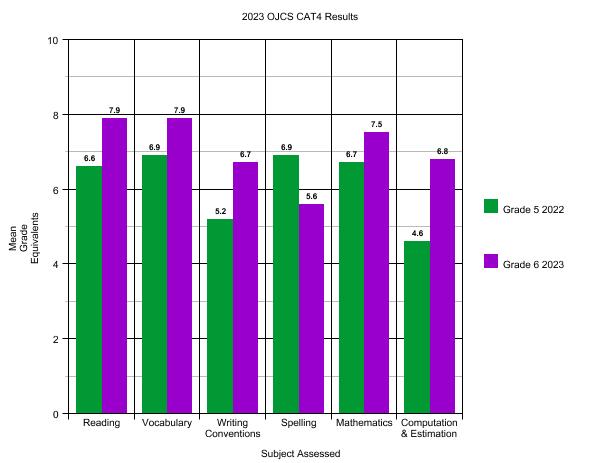

Here are the grade snapshots:

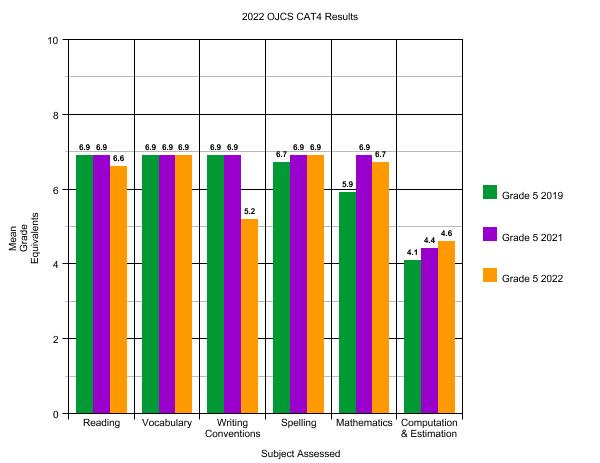

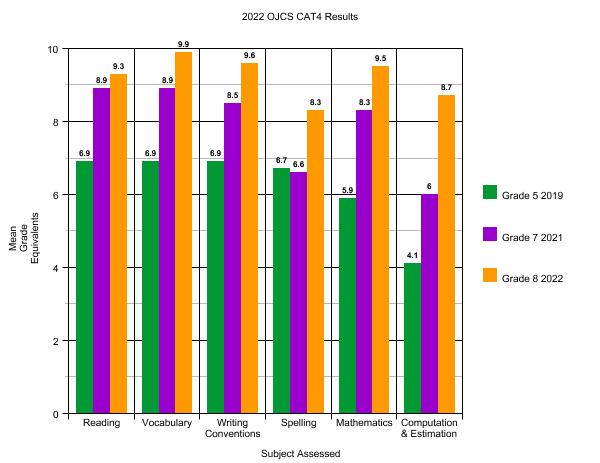

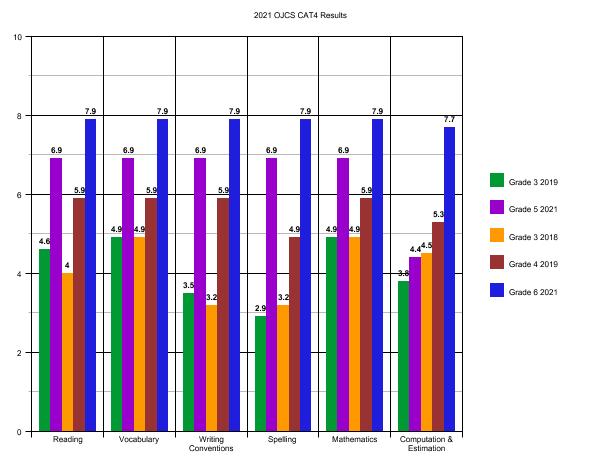

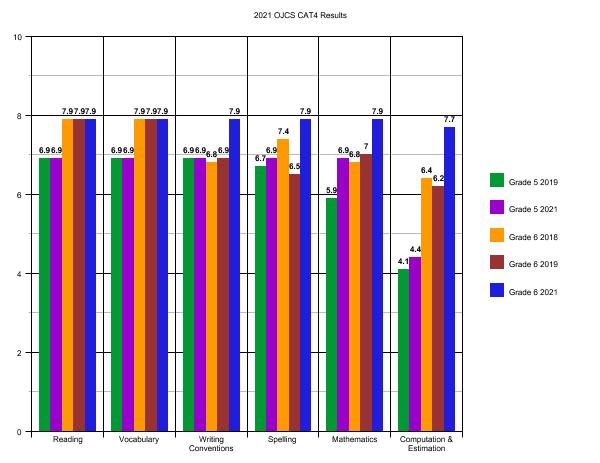

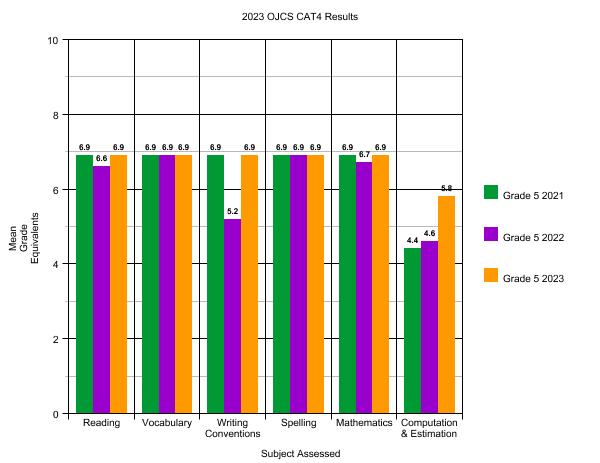

What can we learn from Grade 5 over time?

- Remember these are different children taking this test in Grade 5. So even though, say, for “Writing Conventions” in 2022 they “only” scored at grade level and the other two years it maxed out, you cannot necessarily conclude that something was amiss in Grade 5 in 2022. [You could – and I did – confirm that by referring back to Part II and checking that cohort’s growth over time.]

- What we are mostly seeing here is stability at the high end, which is exactly what we hope to see.

- Now what might constitute a trend is what we see in “Computation & Estimation”, where we began below grade level, have worked hard to institute changes to our program, and now find a trajectory upwards.

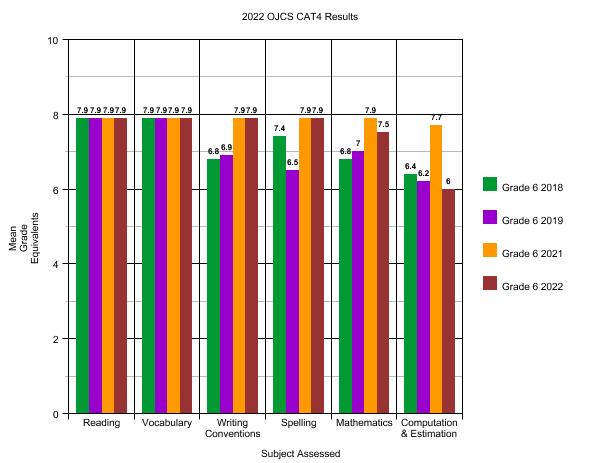

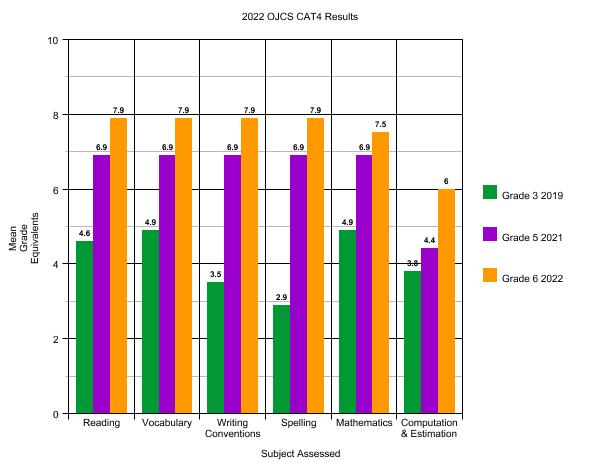

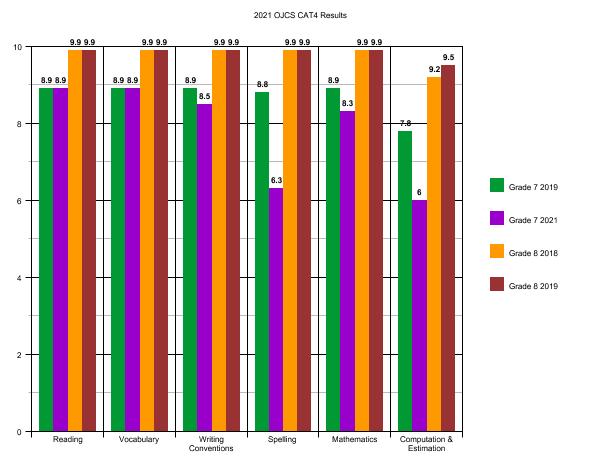

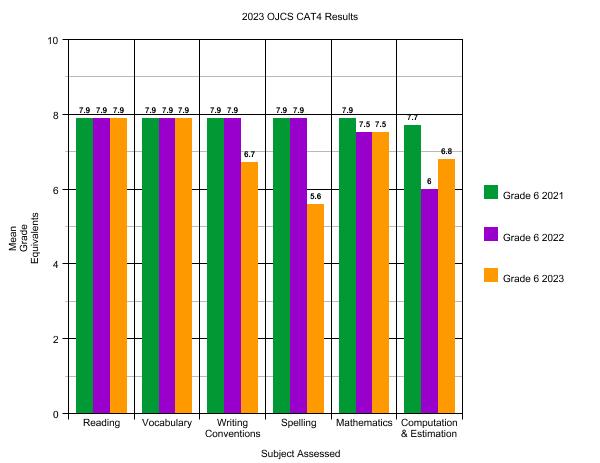

What can we learn from Grade 6 over time?

- Again, because these are different children, we have to be careful, but it will be worth paying attention to “Writing Conventions” and “Spelling” to make sure that this is a cohort anomaly and not a grade trend.

- We will also be looking for greater stability in “Computation & Estimation”.

- Overall, however, high scores and stability for Grade 6.

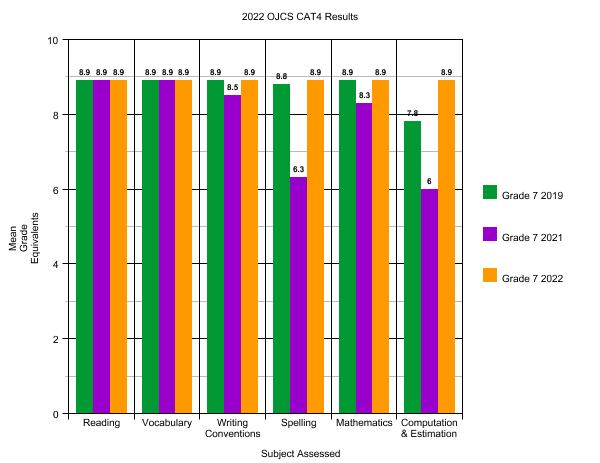

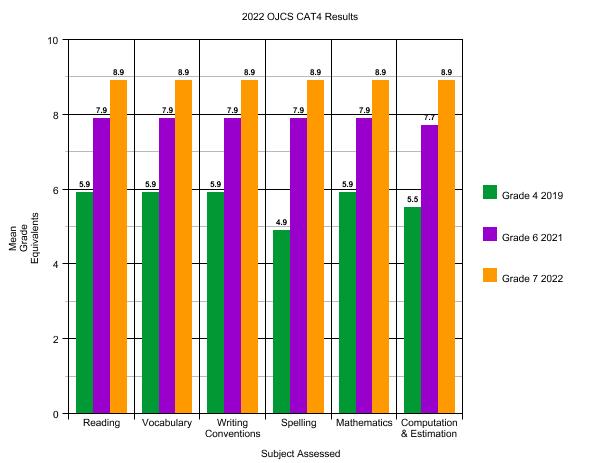

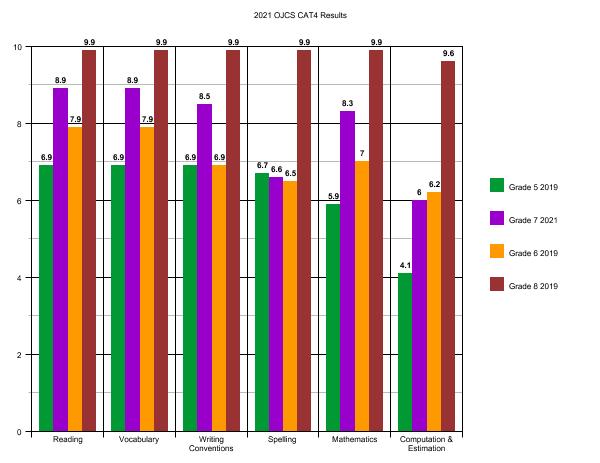

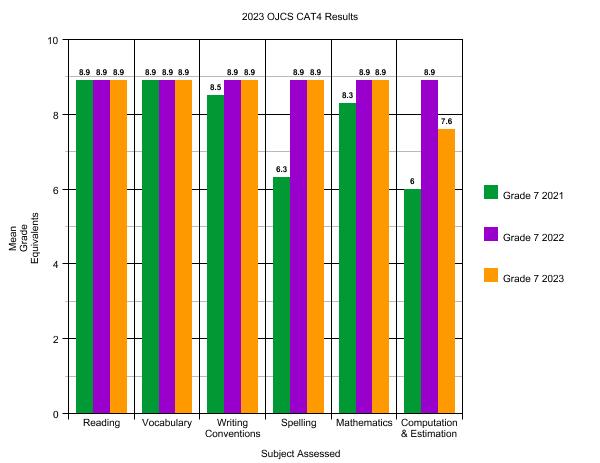

What can we learn from Grade 7 over time?

- Extremely high scores with reasonably high stability!

- We’ll keep an eye on “Computation & Estimation”, which, although high the last two years, is a bit all over the place by comparison.

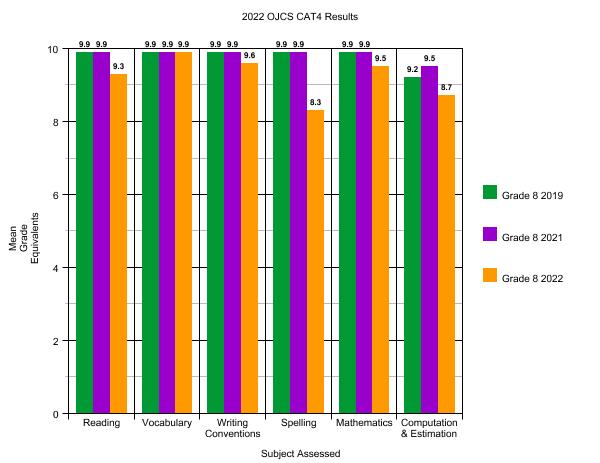

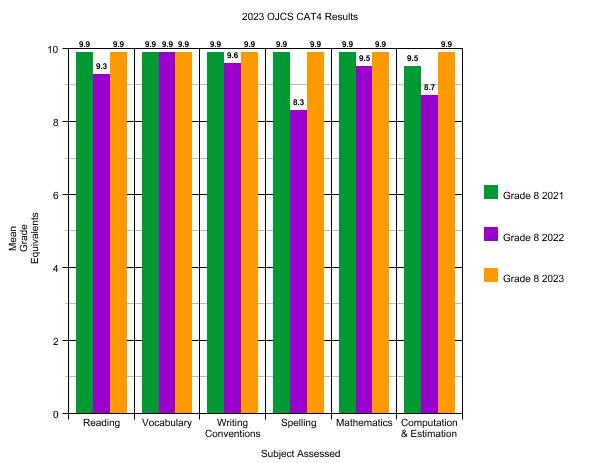

What can we learn from Grade 8 over time?

- Extremely high scores with high stability.

- We’ll need a few more years of data to speak more authoritatively, but a snapshot of where all our students are by their last year at OJCS has to be reassuring for our current parents and, hopefully, inspiring to all those who are considering how OJCS prepares its graduates for high school success.

Current Parents: CAT4 reports will be timed with report cards and Parent-Teacher Conferences. Any parent for whom we believe a contextual conversation is a value add will be folded into conferences.

The bottom line is that our graduates – year after year – successfully place into the high school programs of their choice. Each one had a different ceiling – they are all different – but working with them, their families and their teachers, we successfully transitioned them all to the schools (private and public) and programs (IB, Gifted, French Immersion, Arts, etc.) that they qualified for.

And now again this year, with all the qualifications and caveats, our CAT*4 scores continue to demonstrate excellence. Excellence within the grades and between them.

Not a bad place to be as we enter the second week of the 2024-2025 enrollment season…with well over 50 families already enrolled.