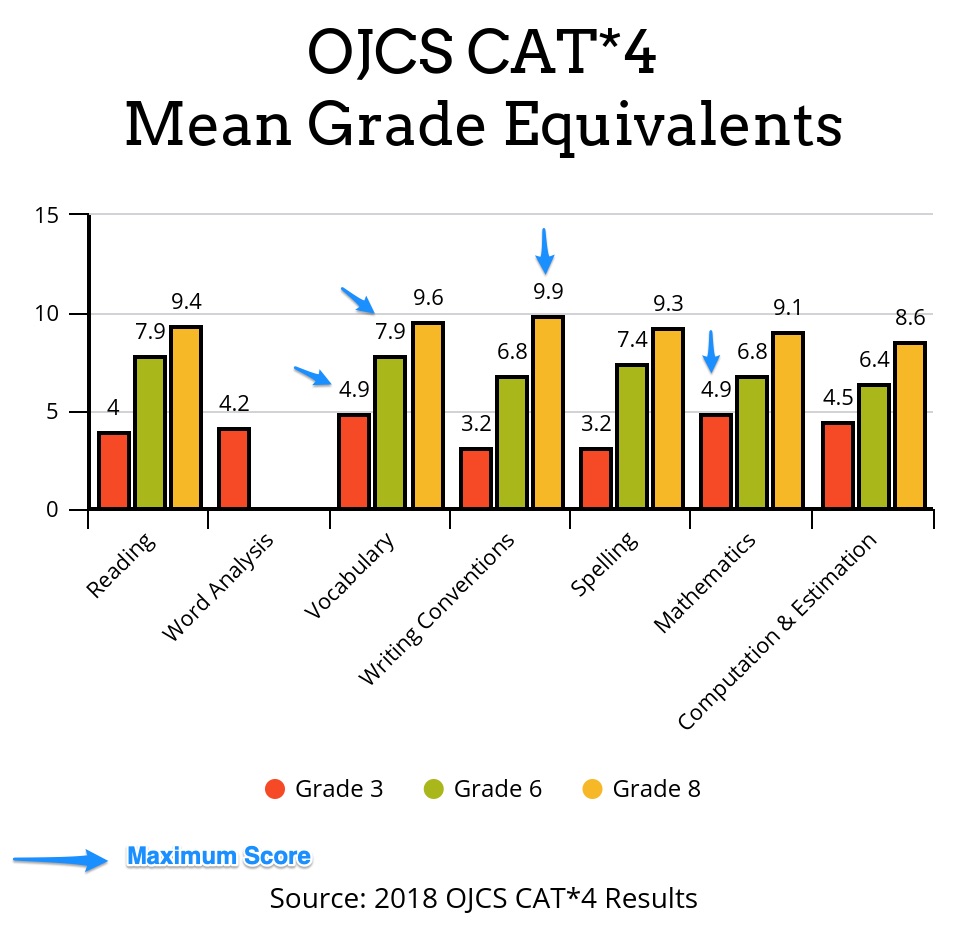

From October 29th to 31st, students at the Ottawa Jewish Community School in Grades 3, 6 and 8 will be writing the Fourth Edition of the Canadian Achievement Tests (CAT-4). The purpose of this test is to inform instruction and programming for the 2018-2019 school year, and to measure our students’ achievement growth over time.

Seems pretty non-controversial, eh?

These days, however, “standardized testing” has become a hot topic. So, with our testing window opening next week, this feels like a good time to step back and clarify why we take this test and how we intend to use and share the results. But first, two things that are new this year:

- We moved our test window from the spring to the fall to align with other private schools in our community, which will give us more useful comparison data. (This is also why we did not write the test last year.)

- We have expanded the number of grades taking the test. We have not yet decided whether that number will expand again in future years.

What exactly is the value of standardized testing and how do we use the information it yields?

It sounds like such a simple question…

My starting point on this issue, like many others, is that all data is good data. There cannot possibly be any harm in knowing all there is to know. The only question is how best to use that data to achieve the fundamental task at hand: to lovingly move a child to reach his or her maximum potential. [North Star Alert! “We have a floor, but not a ceiling.”] Data is useful only to the degree that it helps us accomplish this goal.

Standardized tests, in schools like ours that neither teach to the test nor use curriculum created specifically to succeed on it, are very valuable snapshots. Allow me to be overly didactic and emphasize each word. They are valuable: they really do mean something. And they are snapshots: they are not the entire picture, not by a long shot, of either the child or the school. Only when we keep the results in this context can we avoid the unnecessary anxiety that often bubbles up when scores roll in.

Like any snapshot, a standardized test result ought to resemble its subject. The teacher and the parent should see the results and say to themselves, “Yup, that’s him.” In my experience, this is the case more often than not. Occasionally, however, the snapshot is less clear. Every now and again, the teacher and/or the parent, who have been in healthy and frequent communication all year long, look at the snapshot and say to themselves, “Who is this kid?”

When that happens, and when there is plenty of other rich data (report cards, prior years’ tests, portfolios, assessments, and/or teachers’ notes from the testing that reveal anxiety, sleepiness, etc.), it is okay to decide that someone put their thumb over the lens that day (or for that part of the test) and discard the snapshot altogether.

Okay, you might say, but besides telling us either what we already know or nothing meaningful at all, what can the test teach us?

Good question!

Here is what I expect to learn from standardized testing in our school over time, assuming our benchmarks and standards are aligned with the test we have chosen to take:

Individual Students:

Do we see any trends worth noting? If a student’s overall scores drop by a statistically significant amount in each area tested, test after test, that would be a clear indication that something is amiss (especially if the drop correlates with grades). If a specific section drops significantly test after test, that would be an important sign to pay attention to as well. Is there a dramatic and unexpected change in any section, or overall, on this year’s test?

The answers to all of the above would require conversation with teachers, reference to prior tests and a thorough investigation of the rest of the data to determine whether we have, indeed, discovered something worth knowing and acting upon.

This is why we will be scheduling individual meetings with parents to personally discuss and unpack any test result that shows a statistically significant change (positive or negative) from prior years’ testing or from current assessments.

Additionally, the results themselves are not exactly customer-friendly. There are a lot of numbers and statistics to digest: “stanines” and “percentiles” and whatnot. It is not easy to read and interpret the results without someone who understands them guiding you. As the educators, we feel it is our responsibility to be those guides.

Individual Classes:

Needless to say, if an entire class’s scores took a dramatic turn from one test to the next, it would be worth paying attention to, especially if history keeps repeating. To be clear, I do not mean the CLASS AVERAGE. I do not particularly care how the “class” performs on a standardized test qua “class”. [Yes, I said “qua”; sometimes I cannot help myself.] What I mean is this: if, year after year, each student’s scores in a particular class went up or down in a statistically significant way, that would be meaningful to know. The only metric we concern ourselves with is an individual student’s growth over time, not how s/he compares with the “class”.

That’s what it means to cast a wide net (admissions) while having floors, but no ceilings (education).

School:

If we were to discover that as a school we consistently perform excellently or poorly in any number of subjects, it would present an opportunity to examine our benchmarks, our pedagogy, and our choice of curriculum. If, for example, our Lower School has historically scored poorly in Spelling, we would need to consider whether we have established the right benchmarks for Spelling, whether we teach Spelling appropriately, and/or whether we are using the right Spelling curriculum.

Or, if we believe that an innovative learning paradigm is best for teaching and learning, then we should, in time, be able to show evidence from testing that it is. (It is!)

We eagerly anticipate the results and making full use of them to help each student and teacher continue to grow and improve. We look forward to fruitful conversations.

That’s what it means to be a learning organization.