[NOTE: This is an extended version of an email sent this week to all parents in Grades 6-8. I share it here as it likely will be of interest to our full OJCS community and possibly some of our fellow-travelers on the journey of schools.]

Where the future of Jewish day school is debated, explored and celebrated.


It feels like each year there is something from the outside world that warrants an explanation as to why this year’s Teacher Appreciation Week is worthy of your added attention. Whether it was COVID in prior years or October 7th in this one, the job of being a teacher has only gotten more complicated…and more important. And, of course, here at OJCS what with the relocation and the renovation underway, this year all the more so…

Teachers are not infallible. Teachers make mistakes. Teachers can do the wrong thing. A hopeful return to giving teachers the benefit of the doubt won’t mean blind faith. Giving teachers the benefit of the doubt doesn’t mean parents shouldn’t advocate for their children. Giving teachers the benefit of the doubt doesn’t mean that sometimes parents don’t have a better solution to an issue than their teachers. The best of schools foster healthy parent-teacher relationships explicitly because of these truths. Both partners are required to produce the best results. But somewhere in between my time as a student and my time as an educator, the culture changed. Respect for teachers went from being automatic to being earned to being ignored.

How about this year, let’s assume the best of our teachers – even when they have difficult truths to share. Let’s give them the benefit of the doubt – even when they don’t communicate as well as they could. Let’s treat them as partners – even when they make mistakes. Let’s not simply tell our teachers that we appreciate them; let’s actually appreciate them.

Looking for ideas?

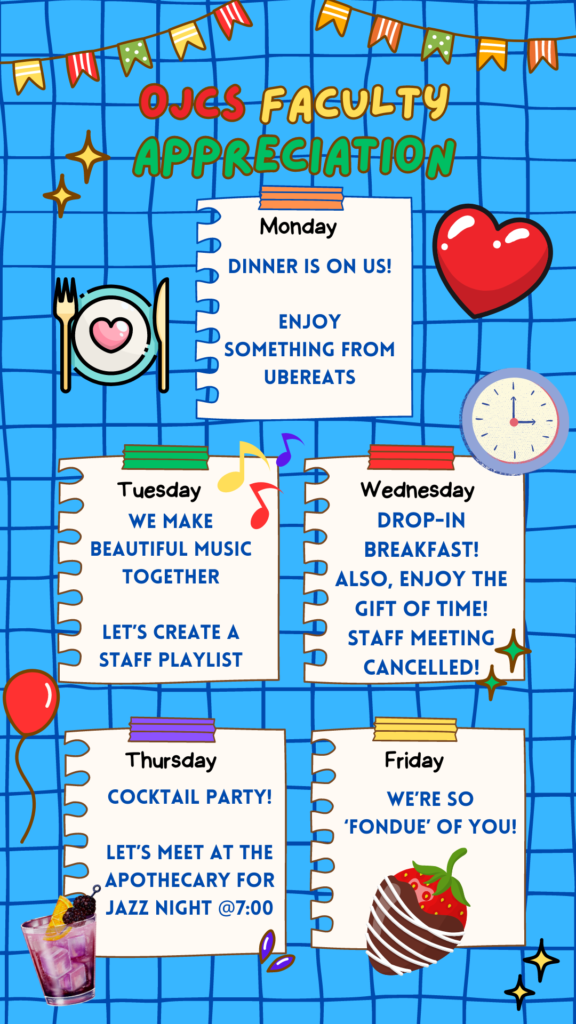

Here is what we will be doing for our teachers as a school:

How about you?

Pump up this great “Teacher Appreciation Week” playlist, pick an item from below (aggregated from lots of blog posts) and make a teacher’s day:

I look forward to sharing results from the Annual Parent Survey later this month. If you have NOT yet contributed and you want your results included, please fill yours out by Monday, May 15th. Please and thank you!

Yes, it is April and, yes, it is snowing.

If you can’t call a snow day and cuddle up with a good book in front of the fire, you could do the next best thing…cuddle up with a great set of student blogfolios and let the fire of their inspiration warm your soul.

I have not done this in a while, but because blogs and blogfolios do make up the spine around which much else is built; and because they are outward facing – available for you and the general public to read, respond and engage with – I do want to make sure that I keep them top of mind by seasonally (even when the seasons are all mixed up!) putting them back in front of parents, community and fellow travelers on the road of education.

For a significant portion of my professional life, I had two children in (my) schools where they maintained blogfolios. I subscribed to them, of course, but I am not going to pretend that I read each and every posting, and certainly not at the time of publication. So whenever I do this, please know that it is never about shaming parents or relatives whose incredibly busy lives make it difficult to read each and every post. As the head of a school where blogfolios are part of the currency, I try to set aside time to browse through and make comments – knowing that each comment gives each student a little dose of recognition and a little boost of motivation. But I am certainly not capable of reading each and every post from each and every student and teacher!

When I am able to scroll through, what I enjoy seeing the most is the range of creativity and personalization that expresses itself through their aesthetic design, the features they choose to include (and leave out), and the voluntary writing. This is what we mean when we talk about “owning our own learning” and having a “floor, but not a ceiling” for each student. [North Star Alert!]

It is also a great example of finding ways to give our students the ability to create meaningful and authentic work. But, it isn’t just about motivation – that we can imagine more easily. When you look more closely, however, it is really about students doing their best work and reflecting about it. Look at how much time they spend editing. Look at how they share peer feedback, revise, collaborate, publish and reflect.

Our classroom blogs and student blogfolios are important virtual windows into the innovative and exciting work happening at OJCS. In addition to encouraging families, friends and relatives to check them out, I also work hard to inspire other schools and thought-leaders who may visit my blog from time to time to visit our school’s blogosphere so as to forge connections between our work and that of fellow-innovators, because we really do “learn better together”. [North Star Alert!]

So please go visit our landing page for OJCS Student Blogfolios. [Please note that due to privacy controls some OJCS students opt for avatars instead of utilizing their first names / last initials, which is our standard setting. That may explain some of the creative titles. Others opt for password-protected accounts and a small number remain entirely private.]

Seriously go! I’ll wait…

…

…

English, French and Hebrew; Language Arts, Science, Math, Social Studies, Jewish Studies; Art, Music, PE, and Student Life and so much more…our students are doing some pretty fantastic things, eh?

I will continue to encourage you not only to check out all the blogs on The OJCS Blogosphere, but also to offer a quality comment of your own – especially to our students. Getting feedback and commentary from the universe is highly motivating…

I was happy to be a guest on a colleague’s podcast last week and it just so happened that blogs and blogfolios became a big part of the conversation! If you are interested…check it out:

Yes, if you are a current parent in our school, you know that we celebrated “Innovation Day” before we celebrated Purim, but my blog has flipped the order. (I really wanted to get my Purim post out before Purim.) That does not mean that we didn’t have an AMAZING “Innovation Day” worth sharing more broadly with our community. The opposite…we had a GREAT day.

I want to be super clear and name that not only did I have virtually nothing to do with the planning and facilitation of this day, I also had virtually nothing to do with the documentation of this day as well. It is my pleasure to use my blog to showcase the work of those who did.

The primary drivers of Innovation Day at OJCS were Josh Ray, who serves as our Makerspace Lead and Middle School Science Teacher, and our Lower School Science Teachers. Everything that you are going to see below is the fruit of their labours – with photo collages captured by Lianna Krantzberg, who dabbles in social while serving as our Student Life Lead. Together with other special events such as Global Maker Day, the JNF Hackathon, the Global Student Showcase, along with regularly scheduled lessons in our Makerspace, Innovation Day shows how OJCS serves as an incubator of innovation for its students (and teachers!).

So. What was this day all about?

Grade 8 – Simple Machines Project

We often say that doing something with a machine requires less work. In this design challenge, you will be responsible for helping design a new sled for students at OJCS to use. Using as many of the six simple machines you learned about in class, your task is to use the design thinking process to design, test, and build a simple machine sled prototype that students can safely use. You will give your innovative sled a name and create a pitch to market your sled to potential investors. May the best sled win!

Your Goal: Working on your own or with a partner, decide which simple machines will be part of your sled design. After researching the six different types of simple machines and realistic DIY sled builds, create a plan for your prototype. Determine what materials you will need and the size and quantity of materials. You may use any materials from home and supplement any other necessary materials from Home Depot. Then, plan how you will proceed. All sections will be presented as part of a 5-minute product pitch that must include the following 5 sections (Empathize, Define, Ideate, Prototype, Test). The pitch will include all information displayed on a tri-fold board presented in front of investors (judges) and will be graded based on the judging rubric.

Grade 7 – Animal Structures Project

All animals, like people, require shelter to protect them from the harsh elements of nature as well as predators. In this design challenge, you will take on the role of an engineer and be responsible for helping design an innovative structure for an animal of your choosing. Using the information about stability, forces, symmetry, and structure types you learned about in class, your task is to use the design thinking process to design a new shelter prototype that animals can safely use using the CoSpaces VR program. You will give your innovative shelter a name and create a pitch to market your structure to potential investors. May the best structure win!

Your Goal: Working on your own or with a partner, decide which animal you would like to build a house/shelter for. After researching stability, forces, symmetry, and structure types as well as the current animal shelters for your chosen animal on the market, you will create a plan for your prototype. Using the Five Freedoms as a guide, determine what structure type you are going to construct, what materials your structure will need, and what strategies you will use to provide stability. You will also need to determine what forces (internal/external) your structure will need to withstand and describe what techniques you used to provide strength and avoid structural failure. You will include this information in your structure design using the virtual reality CoSpaces program for potential investors to see. All sections of the Design Thinking Process will be presented as part of a 5-minute product pitch that must include the following 5 sections (Empathize, Define, Ideate, Prototype, Test). The pitch will include all information displayed on a tri-fold board presented in front of investors (judges) and will be graded based on the judging rubric.

Grade 6 – Electricity Project

Your Goal: In this project, you will build a series circuit that lights a bulb using a power source and conducting wires. Then predict what will happen to the brightness of your bulb if you add more bulbs or batteries to your series circuit, and test your prediction.

All sections will be presented as part of a Google Slides (or equivalent) presentation with each slide representing each step in the Scientific Method.

Grade 5

Using the design thinking framework, the students answered the question: How can we provide electricity to community outdoor spaces in an energy efficient way that still maintains the safety and “fun” aesthetic of the space?

Grade 4

Grade 3

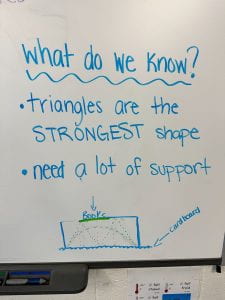

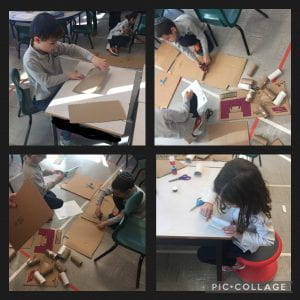

We have jumped right into our Strong and Stable Structures unit in preparation for Innovation Day: 1.) Assess effects on society and the environment of strong and stable structures. 2.) Assess the environmental impact of structures built by various animals, including structures built by humans.

Will the bridges be able to hold up THREE heavy textbooks!?

Grade 2

Grade One

Students are keeping busy with their projects for Innovation Day. They are really putting in the effort, collaborating and designing their projects to make them the best they can be. Building something from scratch can be a real challenge, but it’s also a great opportunity to learn new skills and collaborate with others.

Kindergarten

Thank you so much to everyone who was able to join us for Innovation Day in KA and KB on Wednesday!! The students worked so hard to create their flying machines and to learn about the 4 forces of flight. They were so proud to share what they had learned and created with you. You can watch and listen to the “What Makes Airplanes Fly?” song here and check out the pictures of the process of creating and testing their bamboo helicopters, hovercrafts, and hoop gliders…

Junior Kindergarten

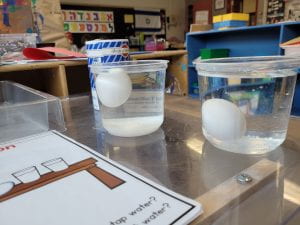

A huge ‘kol ha’kavod’ to JK for their first innovation day! What a fun morning! Thank you to all of our guests for popping in to experiment with us!

Leading up to this morning, JK was heavily invested in learning about what makes objects sink or float. They learned about density, and even conducted an eggsperiment – no, wait, an experiment – to change the density of water so that an object that sank (an egg haha) was now able to float! Check out the booklets your children brought home in their backpacks to re-create the experiment at home! Their main challenge was to build a popsicle stick raft to get their dinosaur across the water table. As the joke goes… Why did the dinosaur cross the river? To get to the other side.

Did our students have an amazing day putting all their passion, talent, knowledge and creativity to good use?

I’d say “yes” – this was a great day of learning at OJCS!

We are back after February Break and are in that special sprint towards Passover Break – with a calendar chock full of ruach. Let’s take a peek forward in anticipation of what should be a very exciting week at the Ottawa Jewish Community School. Let me welcome you to the Second Annual La célébration de la semaine de la Francophonie, featuring our third annual Francofête. [For a bit of background, you are welcome to revisit last year’s post.]

We are so pleased to let you know that next week (March 4-8) will be “La célébration de la semaine de la Francophonie 2024”! The goals are simple – to spend a week marinating in French, celebrating the work of our students and teachers, highlighting the strides our French program has taken in the last few years, and elevating French beyond the boundaries of French class, into the broader OJCS culture. The highlight will be Francofête on Thursday, March 7th at 6:30 PM in the OJCS Gym.

So…what to expect from “La célébration de la semaine de la Francophonie 2024”?

And many more surprises…

So there you go…voilà!

Parents at OJCS will hopefully look forward to lots of opportunities to peek in and/or to see pictures and videos during this year’s celebration and to join us for the Francofête. We’ll look forward to building on this in future years as we continue to showcase French in our trilingual school.

Great appreciation to our entire French Faculty, and to Madame Wanda in particular, who has led this year’s celebration. This should be a week filled with ruach – errr…joie de vivre! [North Star Alert! En Français!]

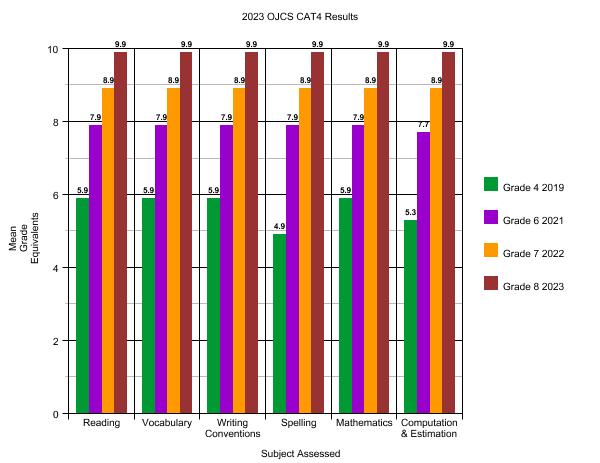

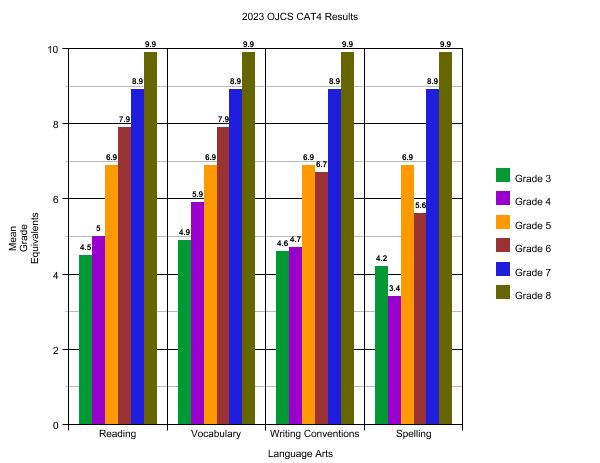

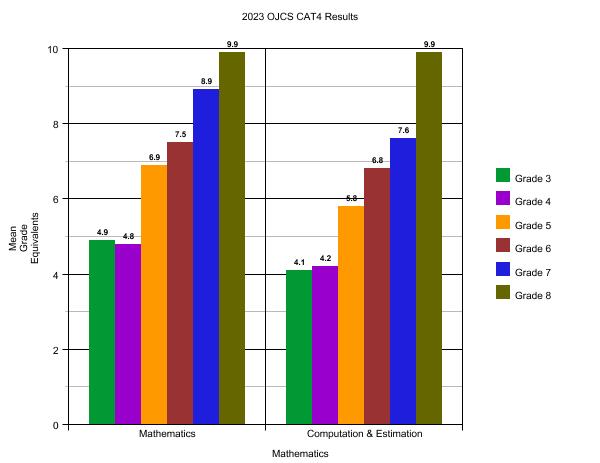

Welcome to “Part III” of our analysis of this year’s CAT4 results!

In Part I, we provided a lot of background context and shared out the simple results of how we did this year. In Part II, we began sharing comparative data, focusing on snapshots of the same cohort (the same children) over time. Remember that it is complicated because of four factors:

In the future, that part (“Part II”) of the analysis will only grow more robust and meaningful. We also provided targeted analysis based on cohort data.

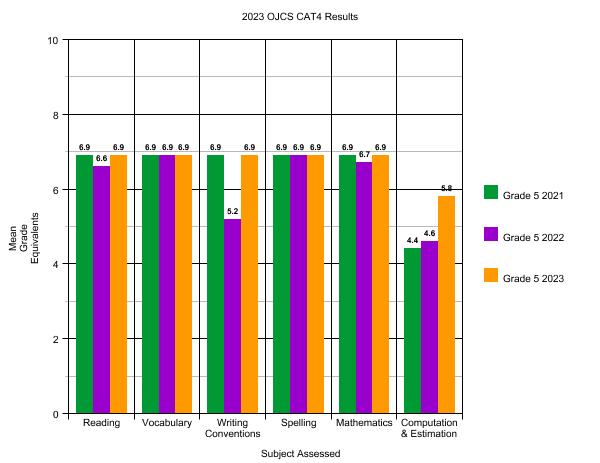

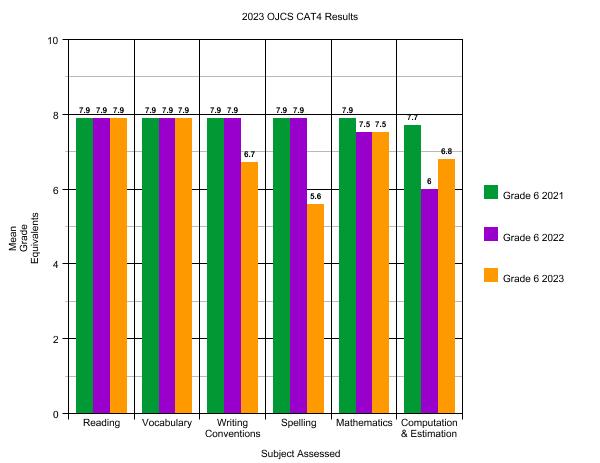

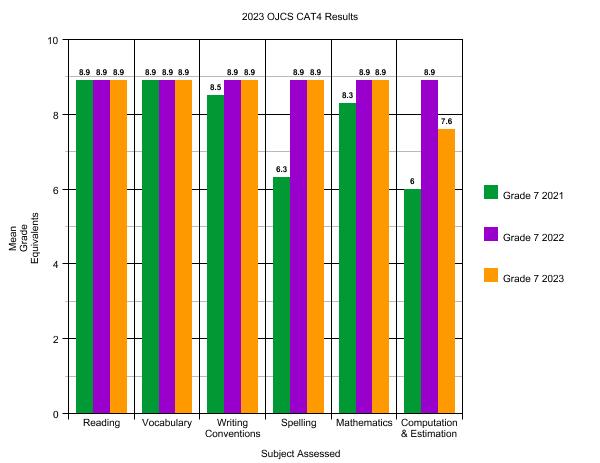

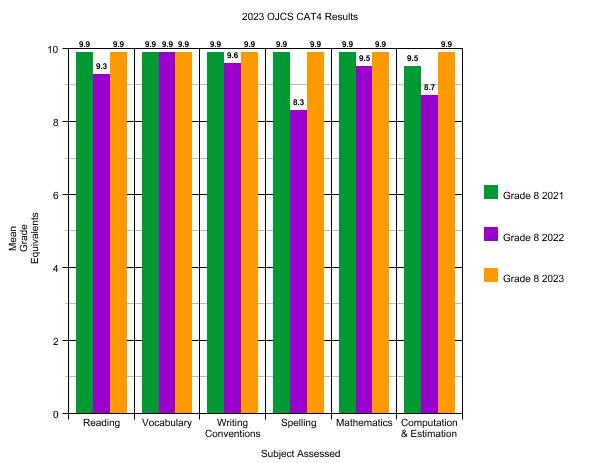

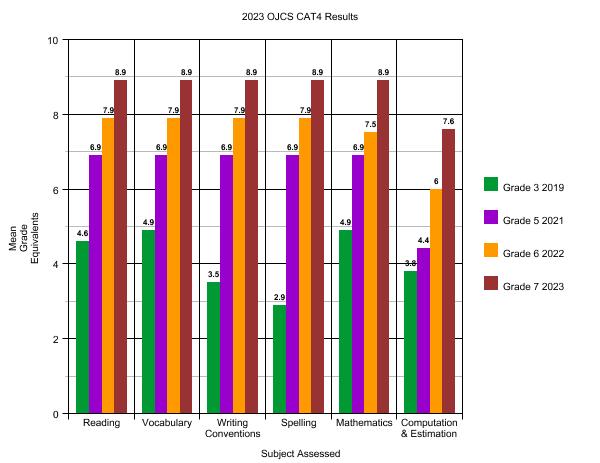

Here, in Part III, we will finish sharing comparative data, this time focusing on snapshots of the same grade (different groups of children). Because it is really hard to identify trends while factoring in skipped years and seismic issues, unlike in Part II where we went back to 2019 for comparative purposes, we are only going to focus on four grades that have multiyear comparative data post-COVID: Grades 5-8 from 2021, 2022, and 2023.

Here is a little analysis that will apply to all four snapshots:

Here are the grade snapshots:

What can we learn from Grade 5 over time?

What can we learn from Grade 6 over time?

What can we learn from Grade 7 over time?

What can we learn from Grade 8 over time?

Current Parents: CAT4 reports will be timed with report cards and Parent-Teacher Conferences. Any parent for whom we believe a contextual conversation is a value add will be folded into conferences.

The bottom line is that our graduates – year after year – successfully place into the high school programs of their choice. Each one had a different ceiling – they are all different – but working with them, their families and their teachers, we successfully transitioned them all to the schools (private and public) and programs (IB, Gifted, French Immersion, Arts, etc.) that they qualified for.

And now again this year, with all the qualifications and caveats, our CAT*4 scores continue to demonstrate excellence. Excellence within the grades and between them.

Not a bad place to be as we enter the second week of the 2024-2025 enrollment season…with well over 50 families already enrolled.

Welcome to “Part II” of our analysis of this year’s CAT*4 results!

In last week’s post, we provided a lot of background context and shared out the simple results of how we did this year. Here, in our second post, we are now able to begin sharing comparative data, focusing on snapshots of the same cohort (the same children) over time. It is complicated because of three factors:

This means that there are only five cohorts that have comparative data – this year’s Grades 4-8. And only two of those cohorts have comparative data beyond two years – this year’s Grades 7-8. It is hard to analyze trends without multiple years of data, but we’ll share what we can.

Here is a little analysis that will apply to all five snapshots:

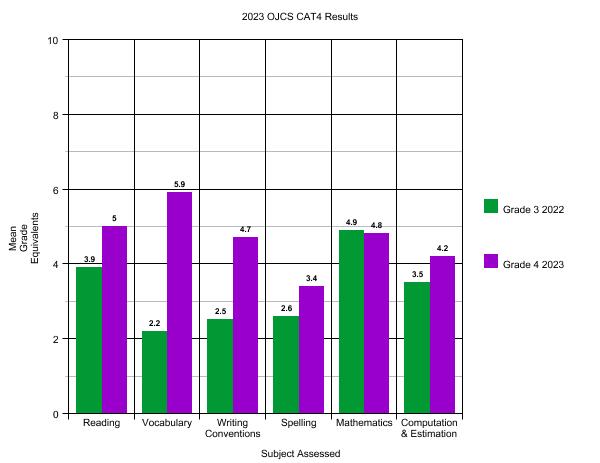

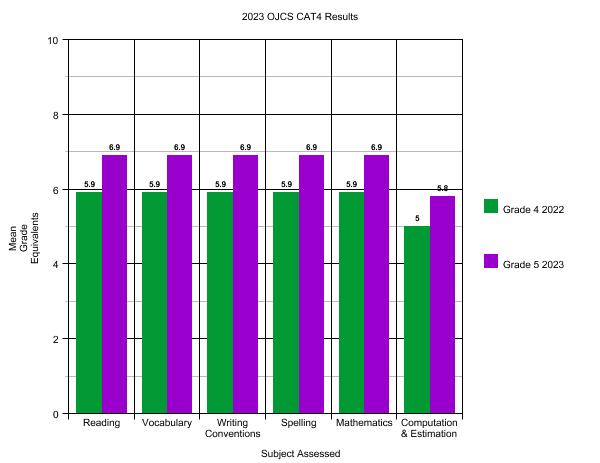

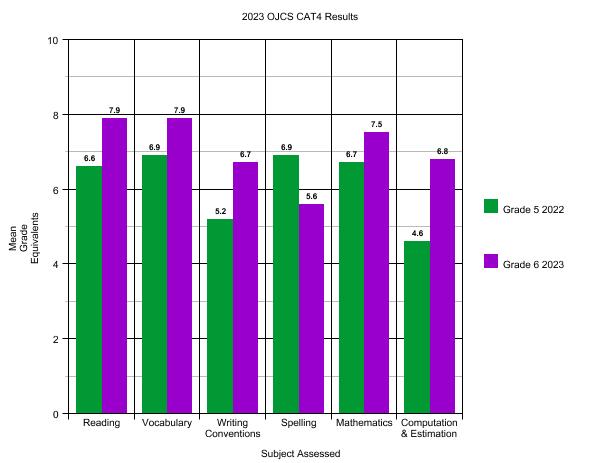

Here are the cohort snapshots:

What does this snapshot of current Grade 4s reveal?

What does this snapshot of current Grade 5s reveal?

What does this snapshot of current Grade 6s reveal?

What does this snapshot of current Grade 7s reveal?

No analysis of current Grade 8s needed, just appreciation for three years of near perfection. Not a bad advertisement for OJCS Middle School.

To sum up this post, we have so much to be proud of in the standardized test scores of these particular cohorts over time. The Math and Language Arts Teachers in Grades 3-8 have now begun meeting to go through their CAT*4 results in greater detail, with an eye towards what kinds of interventions are needed now – in this year – to fill any gaps (both for individual students and for cohorts); and how might we adapt our long-term planning to ensure we are best meeting needs. Parents will be receiving their child(ren)’s score(s) soon and any contextualizing conversations will be folded into Parent-Teacher Conferences.

Stay tuned next week for the concluding “Part III” when we will look at the same grade (different students) over time, see what additional wisdom is to be gleaned from that slice of analysis, and conclude this series of posts with some final summarizing thoughts.

[Note from Jon: If you have either read this post annually or simply want to jump to the results without my excessive background and contextualizing, just scroll straight to the graph. Spoiler alert: These are the best results we have ever had!]

Each year I fret about how to best facilitate an appropriate conversation about why our school engages in standardized testing (which for us, like many independent schools in Canada, is the CAT*4, but next year will become the CAT*5), what the results mean (and what they don’t mean), how it impacts the way in which we think about “curriculum” and, ultimately, what the connection is between a student’s individual results and our school’s personalized learning plan for that student. It is not news that education is a field in which pendulums tend to wildly swing back and forth as new research is brought to light. We are always living in that moment and it has always been my preference to aim towards pragmatism. Everything new isn’t always better and, yet, sometimes it is. Sometimes you know right away and sometimes it takes years.

The last few years, I have taken a blog post that I used to push out in one giant sea of words, and broken it into two, and now three parts, because even I don’t want to read a 3,000 word post. But, truthfully, it still doesn’t seem enough. I continue to worry that I have not done a thorough enough job providing background, research and context to justify a public-facing sharing of standardized test scores. Probably because I haven’t.

And yet.

With the forthcoming launch of Annual Grades 9 & 12 Alumni Surveys and the opening of the admissions season for the 2024-2025 school year, it feels fair and appropriate to be as transparent as we can about how well we are (or aren’t) succeeding academically against an external set of benchmarks, even as we are still facing extraordinary circumstances. [We took the test just a couple of weeks after “October 7th”.] That’s what “transparency” as a value and a verb looks like. We commit to sharing the data and our analysis regardless of outcome. We also do it because we know that for the overwhelming majority of our parents, excellence in secular academics is a non-negotiable, and that in a competitive marketplace with both well-regarded public schools and secular private schools, our parents deserve to see the school’s value proposition validated beyond anecdotes.

Now for the annual litany of caveats and preemptive statements…

We have not yet shared out individual reports to our parents. First our teachers have to have a chance to review the data to identify which test results fully resemble their children well enough to simply pass on, and which results require contextualization in private conversation. Those contextualizing conversations will take place in the next few weeks and, thereafter, we should be able to return all results.

There are a few things worth pointing out:

Grade-equivalent scores attempt to show at what grade level and month your child is functioning. However, grade-equivalent scores are not able to show this. Let me use an example to illustrate this. In reading comprehension, your son in Grade 5 scored a 7.3 grade equivalent on his Grade 5 test. The 7 represents the grade level while the 3 represents the month. 7.3 would represent the seventh grade, third month, which is December. The reason it is the third month is because September is zero, October is one, etc. It is not true though that your son is functioning at the seventh grade level since he was never tested on seventh grade material. He was only tested on fifth grade material. He performed like a seventh grader on fifth grade material. That’s why the grade-equivalent scores should not be used to decide at what grade level a student is functioning.
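For the data-minded, the decimal convention in that example can be written out as a few lines of Python. This is only an illustrative sketch: the function name and the month list are my own, and I am assuming the count runs September through June, since the example only spells out that September is zero and October is one. It decodes the label on a score and says nothing about the grade level at which a student is actually functioning.

```python
from typing import Tuple

# Assumption: a ten-month school year, with September counted as month 0.
SCHOOL_MONTHS = [
    "September", "October", "November", "December", "January",
    "February", "March", "April", "May", "June",
]


def decode_grade_equivalent(score: str) -> Tuple[int, str]:
    """Split a grade-equivalent score such as "7.3" into (grade, month name).

    The score is taken as text so the digit after the decimal point is read
    exactly, with no floating-point rounding involved.
    """
    grade_text, month_text = score.split(".")
    grade = int(grade_text)
    month_index = int(month_text)
    return grade, SCHOOL_MONTHS[month_index]


print(decode_grade_equivalent("7.3"))
```

Run on the "7.3" from the example, this gives grade 7 and December, matching the explanation above. The student was still only tested on Grade 5 material.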

Let me finish this section by being very clear: We do not believe that standardized test scores represent the only, nor surely the best, evidence for academic success. Our goal continues to be providing each student with a “floor, but no ceiling” representing each student’s maximum success. Our best outcome is still producing students who become lifelong learners.

But I also don’t want to undersell the objective evidence that shows that the work we are doing here does in fact lead to tangible success. That’s the headline, but let’s look more closely at the story. (You may wish to zoom in a bit on whatever device you are reading this on…)

A few tips on how to read this:

What are the key takeaways from these snapshots of the entire school?

It does not require a sophisticated analysis to see how exceedingly well each and every grade has done in just about each and every section. In almost all cases, each and every grade is performing significantly above grade-level. This is a very encouraging set of data points.

Stay tuned next week when we begin to dive into the comparative data. “Part II” will look at the same cohort (the same group of students) over time. “Part III” will look at the same grade over time and conclude this series of posts with some additional summarizing thoughts.

Once upon a time all the high schools in our community – both public and private – gave formal exams in Grade 9. And so it was not only natural, it was an advantage for students at OJCS to take a series of exams during the Grades 7 and 8 years. It checked (at least) three meaningful boxes:

And then…say it with me…COVID.

And ever since, the public high schools have not offered exams in Grades 9 & 10 and do not seem to be on a path towards doing so again. Private schools in our community do offer exams in Grade 9. And to the degree that context matters, we did some digging and it is additionally true that other independent schools in our community do offer exams in Grade 8 (or even earlier) and so if that is the water we are swimming in, perhaps it is that simple. But part of being “independent” is that we get to make the decision for ourselves, and so it raises the question of what we ought to do at OJCS if one of our three boxes no longer applies. Do the other two warrant the energy (and for some students the anxiety) for OJCS to continue to offer exams, and if so, in which grades and subjects?

Zooming out, there are lots of skills and experiences we teach and provide at OJCS that are not necessarily formally carried forward to high school. I have learned this firsthand as a parent of two OJCS graduates, one now in university and one still in high school. Those skills – whether they be technological, organizational, public speaking, self-advocacy and many others – may not have had direct application to this (high school) class or another, but have definitely served them well as students. If we were deciding whether or not to use iPads, or host hackathons, or a million other things based on what will be true in public school in grade nine, we might as well be public school ourselves. So we feel very comfortable suggesting that whether or not our graduates going on to public schools do or don’t have formal exams in grade nine, it ought not determine what we do. So much for “Box #3”.

Boxes #1 & 2 still feel very valuable. While always managing and paying attention to student anxiety and their version of “school/life balance” – and always honouring IEPs and Support Plans – we definitely believe that the process of preparing, studying and taking formal exams is a value add for our students as they prepare for the added rigours of high school. Grit and resiliency can only come about through authentic experience; sometimes you have to be a little uncomfortable, suffer a little adversity, be a little anxious. So there’s “Box #1”.

Box #2 is interesting and at least for this year (and likely next) determinative. We have lots of opportunities to utilize external benchmarks and standardized testing to provide data on what students who are graduating OJCS have (and haven’t) learned. We have the most data on Math and Language Arts by virtue of the CAT-4, Amplify, IXL, etc. If we wanted to gather similar results for Social Studies and/or Science we could decide if and when to add those modules to our CAT-4. The two places where we could benefit from better knowledge are Jewish Studies and French. We have made significant progress in knowing what is true in French with last year’s introduction of the DELF Exam, but it only targeted the highest achieving students. No such external standard exists for Hebrew / Jewish Studies.

And so for all of the above reasons, here is what will be true this Spring at OJCS. Students in Grade 8 will take two exams. They will all take a Jewish Studies Final (which is completely consistent with past and present practice) and they will take either a French Final or the DELF (the “French Final” being an in-house exam offered at both the Core and Extended (if needed) levels). We’ll see how that goes, check results, solicit feedback and make any adjustments if needed for future years.

And with this totally normal little blog post in the middle of what is still a very complicated world and time…Winter Break. See you in 2024.

We’re back!

This has been an amazing Faculty Pre-Planning Week that has us poised for our biggest and best year yet! Our teachers consist of one group of amazing returning teachers, and another group of talented new teachers, and the combination is magical. A school is only as good as its teachers, so…OJCS is in good hands, with all arrows pointing up. Enrollment is still coming in, and I can safely say that we will be a larger school than the year before for the sixth consecutive school year.

Do you ever wonder how we spend this week of preparations while y’all are busy getting your last cottage days or summer trips or rays of sun in?

I think there is value in our parents (and community) having a sense for the kinds of issues and ideas we explore and work on during our planning week because it foreshadows the year to come. So as you enjoy those last days on the lake or on the couch, let me paint a little picture of how we are preparing to make 2023-2024 the best year yet.

Here’s a curated selection from our activities…

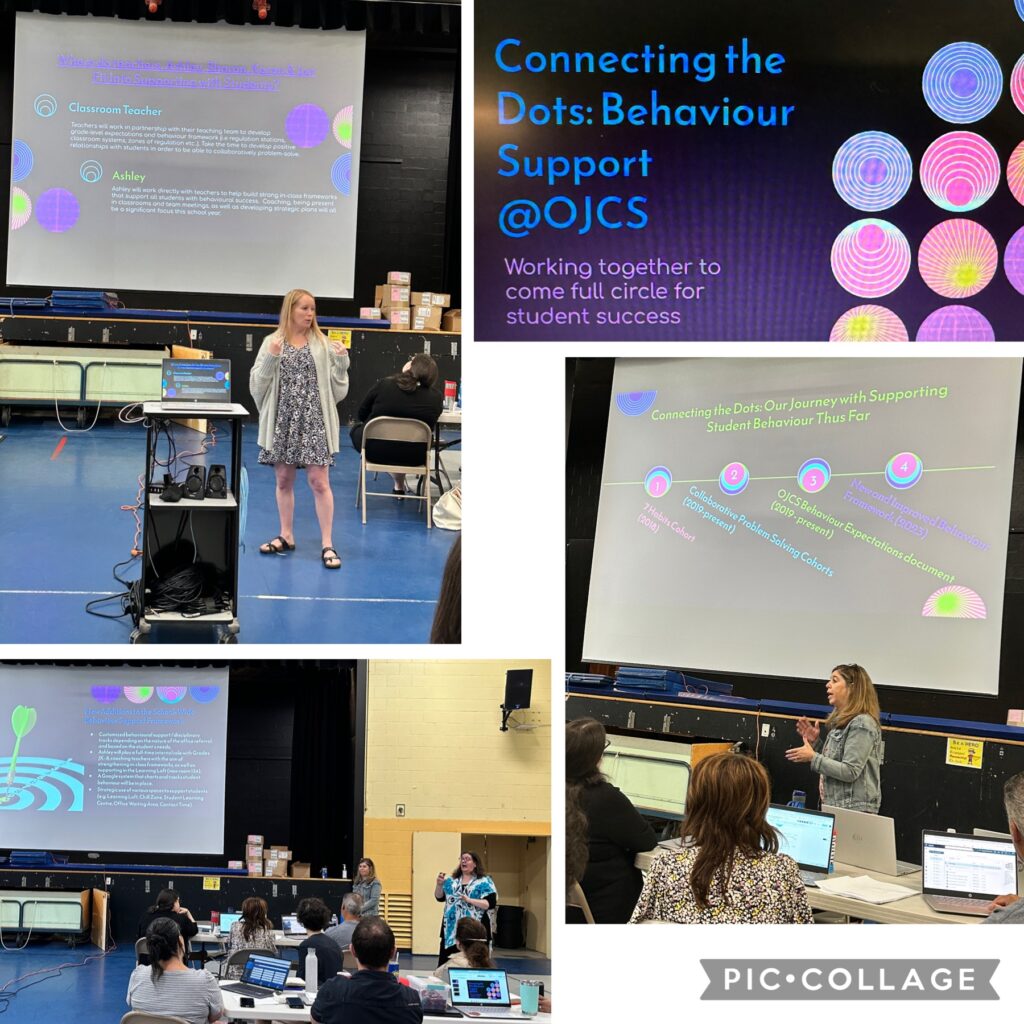

The “Connecting the Dots” Cafe

Each year (16 years, 7 at OJCS and counting!), I begin “Pre-Planning Week” with an updated version of the “World Café”. It is a collaborative brainstorming activity centered on a key question. Each year’s question is designed to encapsulate that year’s “big idea”. This year’s big idea? Connecting the Dots!

With a growing school with so many departments, languages, programs, etc., in order to make sure our students, teachers and parents are able to experience OJCS as holistic human beings and to benefit from all we have to offer, we will aim this year to forge the connections, break out of the silos, simplify and streamline where appropriate, facilitate the communication and do less even better.

Here’s what connected collaboration looks like…

Conscious Leadership

Get used to hearing your children locating themselves “above” or “below the line” as we introduced some key ideas from The 15 Commitments of Conscious Leadership – read this summer by the Admin – to our fuller faculty. Every now and again we introduce new “frameworks” that provide a shorthand, a vocabulary, and a culture that allow our teachers and our students to make sense of themselves and the world. The big ideas of “Conscious Leadership” are completely anchored in our North Stars, what we believe to be true about children, and the way we think and talk about “regulation”. Along with those other values and ideas, they will continue to help us professionalize ourselves and upgrade our engagement with parents and students. Do you want to learn along with us? Check out the following and see if and how you might apply it to your professional and/or parenting lives:

Next time you have to have a difficult conversation, just let us know if we are bringing you “below the line” and we can help make that positive “shift”.

Connecting the Dots: Behaviour Support @ OJCS

This will be big, the focus of attention at Back to School Night (9/19 @ 7:00 PM), and the subject of its own blog post in the weeks ahead, so please just consider this a “teaser”. But you should also “connect the dots” between this and what I wrote near the end of last year in my post sharing the results of the Annual Parent Survey:

The one metric that I am disappointed to see take a dip down after three straight positive years is the last one, which essentially serves as a proxy for school-wide behavior management. Four years ago we scored a 6.69 and I stated that, “we are working on launching a new, school-wide behavior management system next year based on the “7 Habits” and anchored in our “North Stars”. I will be surprised if this score doesn’t go up next year.” Well, three years ago it came in at 7.65, two years ago it climbed up to 8.19, and it remained high at 7.85 last year. 6.73 puts us back at square one – even if it rounds into the acceptable range, and even with a small sample size. Parents at OJCS can expect to see significant attention being paid to overall behavior management in 2023-2024.

“Significant attention” has been and is being paid. You can see it reflected in staffing and you will see it reflected here. For now, remember…

…and know that…

…thanks to the hard work of a lot of people, our new framework is poised to make this our best year yet. Curious? Want to know more? Stay tuned!

Did I do one of my spiritual check-ins on the topic of “Comfort & Community”? Sure did!

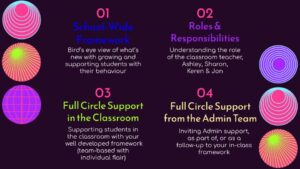

Did Mrs. Reichstein and Ms. Beswick lead a session on “Bringing the IEP to Life”?

Did Mrs. Bennett, Mr. Max, Mrs. Thompson and I provide differentiated instruction on best practices for Classroom Blogs & Student Blogfolios? Yessiree!

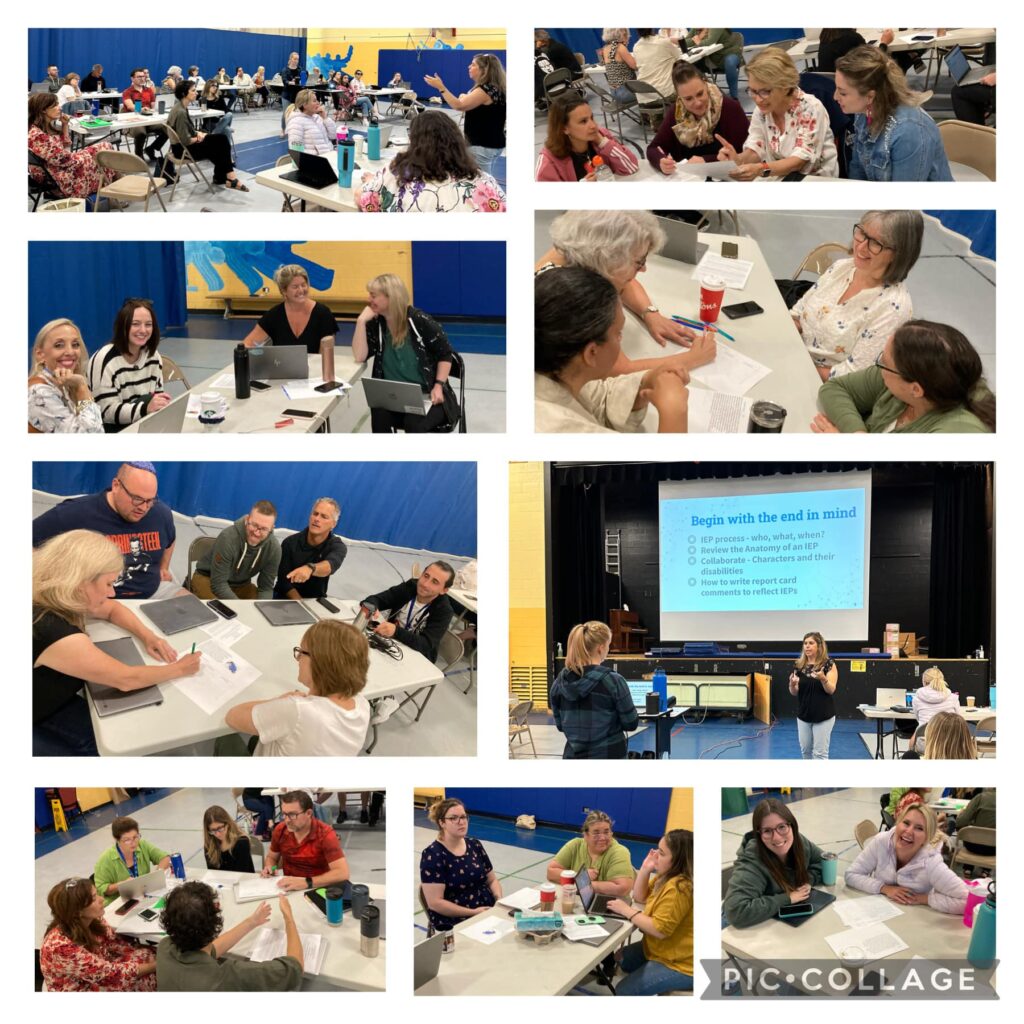

Did the OJCS Makerspace Team facilitate a hands-on creative session for teachers in the Makerspace now that it is becoming a hub for innovation at OJCS? (This work is a direct result of an Innovation Capacity Grant from the Jewish Federation of Ottawa!) Yup!

Did Ms. Gordon go over all the guidelines and protocols and procedures and rules and mandates to keep us all in the know? No doubt!

Did our teachers have lots of time to meet and prepare and collaborate and organize and do all the things needed to open up school on Tuesday? And then some!

All that and much more took place during this week of planning. We are prepared to provide a rigorous, creative, innovative, personalized, and ruach-filled learning experience for each and every one of our precious students who we cannot wait to greet in person on the first day of school!

Wishing you and yours a wonderful holiday weekend and a successful launch to the 2023-2024 school year…

BTW – want to hear from our own teachers about who they are and how excited they are for this year? Introducing our first podcast of the year… Meet the OJCS faculty! Give our podcast a listen and reply below to let us know what you are most excited about this year!